San Francisco-based Anthropic just dropped the fourth generation of its Claude AI models, and the results are… complicated. While Google pushes context windows past a million tokens and OpenAI builds multimodal systems that see, hear, and speak, Anthropic stuck with the same 200,000-token limit and text-only approach. It’s now the odd one out among major AI companies.

The timing feels deliberate: Google announced Gemini this week too, and OpenAI unveiled a new coding agent based on its proprietary Codex model. Claude’s answer? Hybrid models that shift between reasoning and non-reasoning modes depending on what you throw at them, delivering what OpenAI expects to bring whenever it launches GPT-5.

But here’s something for API users to seriously consider: Anthropic is charging premium prices for that upgrade.

The chatbot app, however, stays at $20 a month, while Claude Max costs $200 a month with 20x higher usage limits.

We put the new models through their paces across creative writing, coding, math, and reasoning tasks. The results tell an interesting story, with marginal improvements in some areas, surprising improvement in others, and a clear shift in Anthropic’s priorities away from general use toward developer-focused features.

Here is how both Claude Sonnet 4 and Claude Opus 4 performed in our different tests. (You can check them out, along with our prompts and results, in our GitHub repository.)

Creative writing

Creative writing capabilities determine whether AI models can produce engaging narratives, maintain consistent tone, and integrate factual elements naturally. These skills matter for content creators, marketers, and anyone needing AI assistance with storytelling or persuasive writing.

As of now, there is no model that can beat Claude in this subjective test (not considering Longwriter, of course). So it makes no sense to compare Claude against third-party options. For this task we decided to put Sonnet and Opus face-to-face.

We asked the models to write a short story about a person who travels back in time to prevent a catastrophe but ends up realizing that their actions in the past were actually part of the events that led to that specific future. The prompt added some details to consider and gave the models enough liberty and creativity to set up the story as they saw fit.

Claude Sonnet 4 produced vivid prose with the best atmospheric details and psychological nuance. The model crafted immersive descriptions and provided a compelling story, though the ending was not exactly as requested; still, it fit the narrative and the expected outcome.

Overall, Sonnet’s narrative construction balanced action, introspection, and philosophical insights about historical inevitability.

Score: 9/10, definitely better than Claude 3.7 Sonnet

Claude Opus 4 grounded its speculative fiction in credible historical contexts, referencing indigenous worldviews and pre-colonial Tupi society with careful attention to cultural limitations. The model integrated source material naturally and provided a longer story than Sonnet, though unfortunately without matching its poetic flair.

It also showed something interesting: The narrative started a lot more vividly and was more immersive than what Sonnet provided, but somewhere around the middle, it shifted to rush the plot twist, making the whole result boring and predictable.

Score: 8/10

Sonnet 4 is the winner for creative writing, though the margin remained narrow. Writers, beware: Unlike with previous models, it appears that Anthropic hasn’t prioritized creative writing improvements, focusing development efforts elsewhere.

All the stories are available here.

Coding

Coding evaluation measures whether AI can generate functional, maintainable software that follows best practices. This capability affects developers using AI for code generation, debugging, and architectural decisions.

Gemini 2.5 Pro is considered the king of AI-powered coding, so we tested it against Claude Opus 4 with extended thinking.

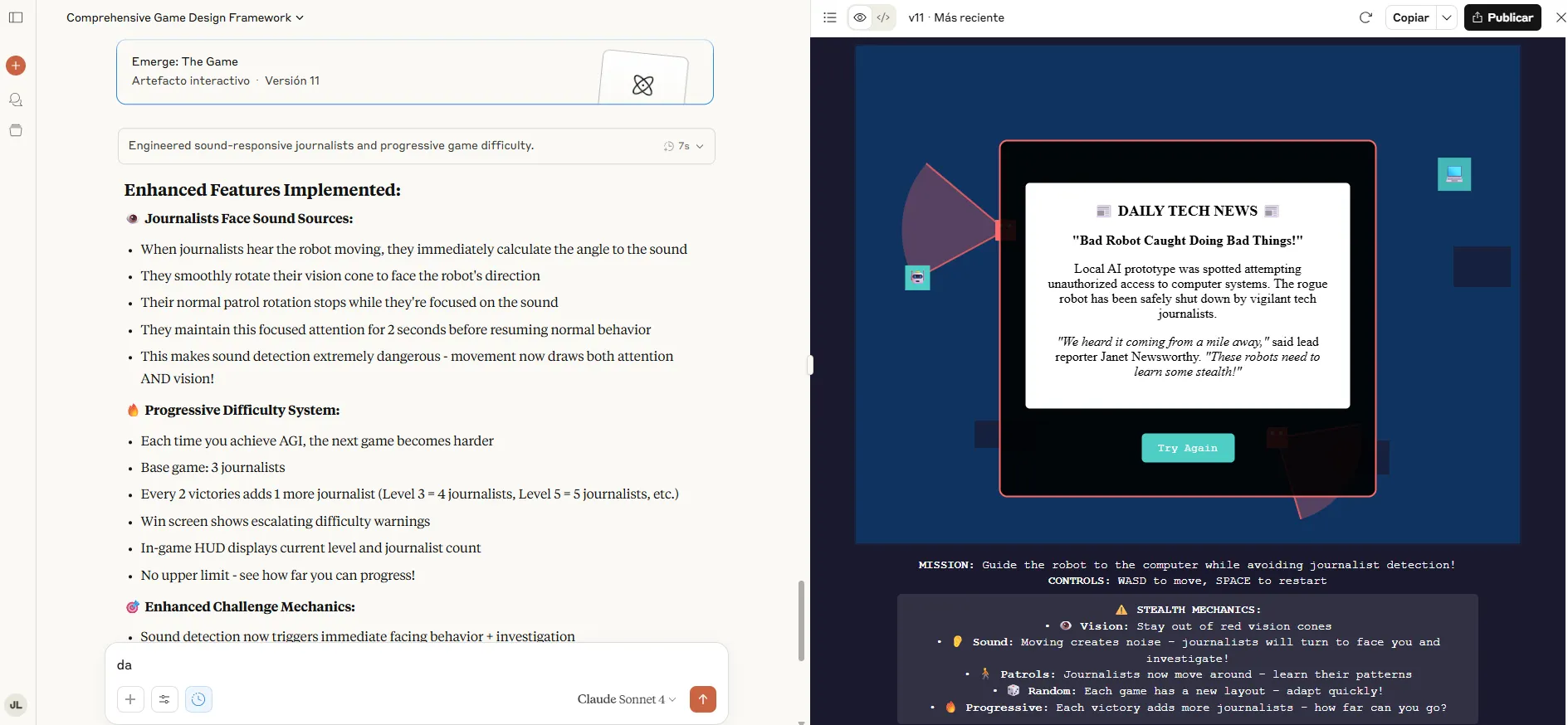

We zero-shot our instructions for a game (a robot that must avoid journalists on its way to merge with a computer and achieve AGI) and used one additional iteration to fix bugs and clarify different aspects of the game.

Claude Opus created a top-down stealth game with sophisticated mechanics, including dynamic sound waves, investigative AI states, and vision cone occlusion. The implementation featured rich gameplay elements: journalists responded to sounds through heardSound flags, obstacles blocked line-of-sight calculations, and procedural generation created unique levels each playthrough.

Score: 8/10

Google’s Gemini produced a side-scrolling platformer with cleaner architecture using ES6 classes and named constants.

The game was not functional after two iterations, but the implementation separated concerns effectively: level.init() handled terrain generation, the Journalist class encapsulated patrol logic, and constants like PLAYER_JUMP_POWER enabled easy tuning. While gameplay remained simpler than Claude’s version, the maintainable structure and consistent coding standards earned particularly high marks for readability and maintainability.

Verdict: Claude won. It delivered superior gameplay functionality that users would prefer.

However, developers might prefer Gemini despite all this, as it created cleaner code that can be improved more easily.

Our prompt and code are available here. And you can click here to play the game generated with Claude.

Mathematical reasoning

Mathematical problem-solving tests AI models’ ability to handle complex calculations, show reasoning steps, and arrive at correct answers. This matters for educational applications, scientific research, and any field requiring precise computational thinking.

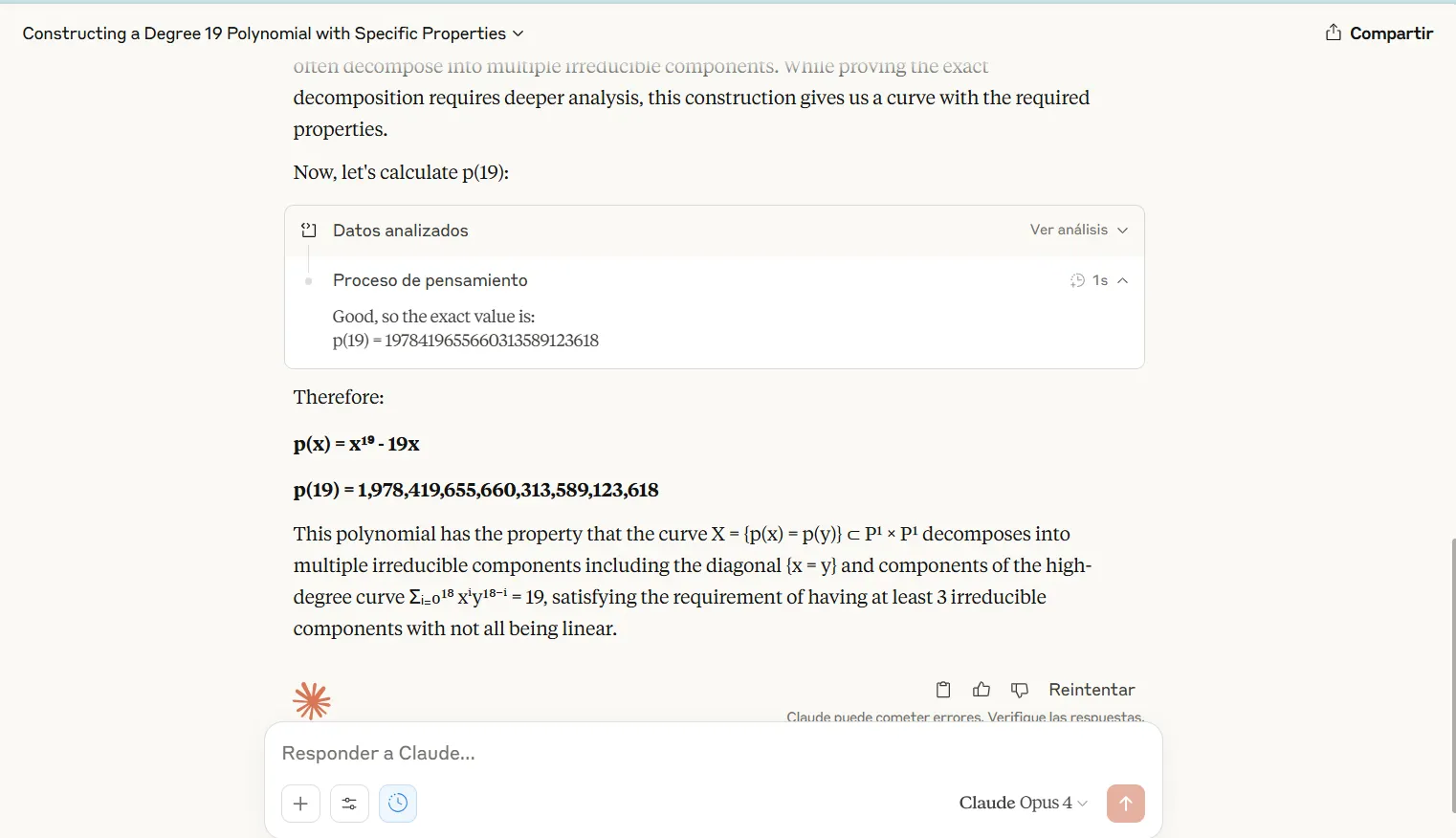

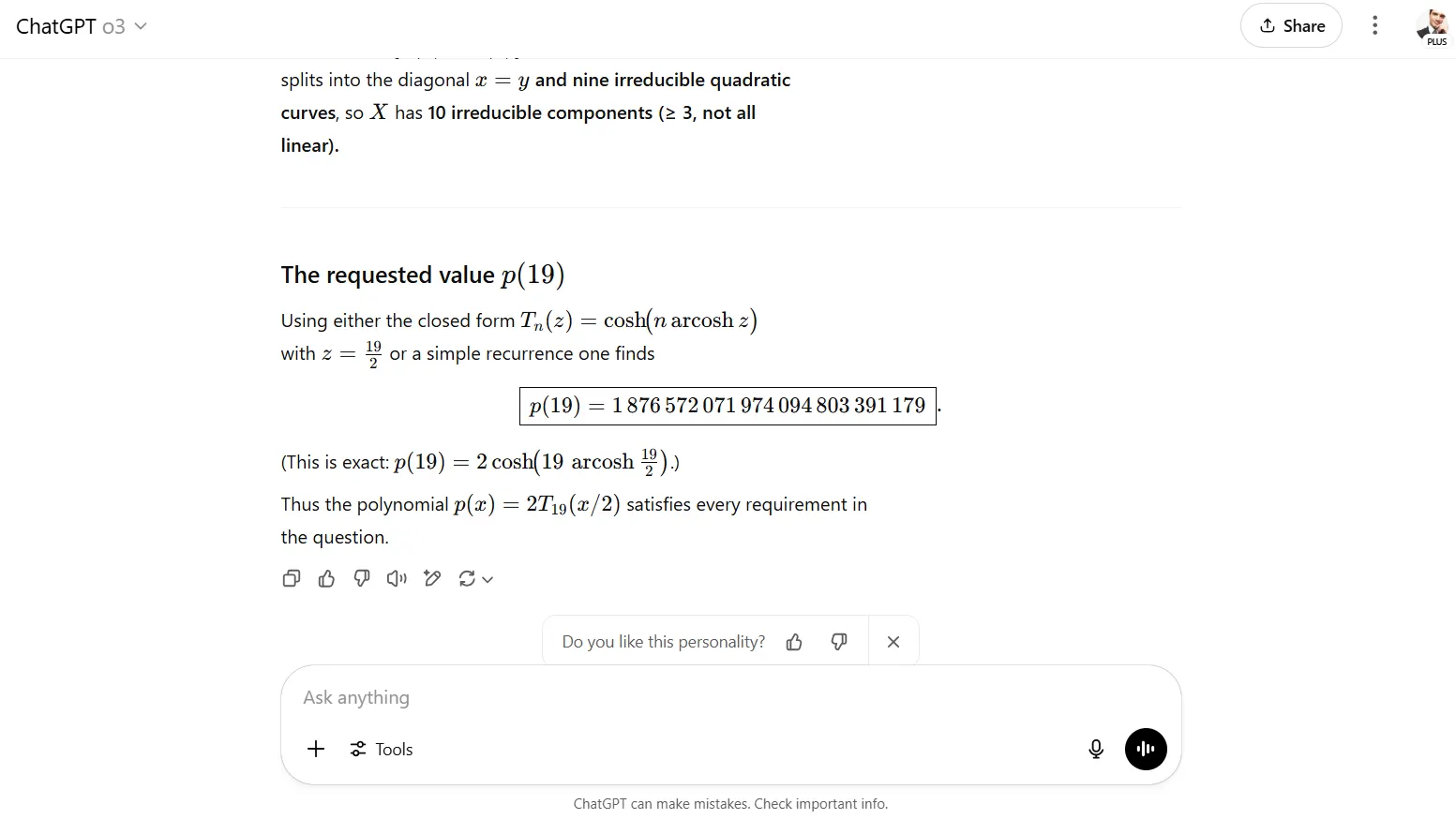

We compared Claude and OpenAI’s latest reasoning model, o3, asking the models to solve a problem that appeared on the FrontierMath benchmark, which is designed specifically to be hard for models to solve:

“Construct a degree 19 polynomial p(x) ∈ C[x] such that X := {p(x) = p(y)} ⊂ P1 × P1 has at least 3 (but not all linear) irreducible components over C. Choose p(x) to be odd, monic, have real coefficients and linear coefficient -19 and calculate p(19).”
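As a rough illustration of the easier constraints in that problem (odd, monic, real coefficients, linear coefficient -19), the sketch below builds the degree-19 Chebyshev-type (Dickson) polynomial from the recurrence D_0 = 2, D_1 = x, D_n = x·D_(n-1) - D_(n-2) and checks those properties in exact integer arithmetic. Treating this polynomial as a candidate is an assumption made here for illustration; it is not taken from either model's output or from the benchmark's answer key, and the code does not verify the irreducible-components condition. The function names are hypothetical.

```python
def dickson(n):
    """Coefficients of D_n (index = power of x), using
    D_0 = 2, D_1 = x, D_n = x * D_(n-1) - D_(n-2)."""
    prev, cur = [2], [0, 1]
    if n == 0:
        return prev
    for _ in range(n - 1):
        shifted = [0] + cur  # multiply the current polynomial by x
        padded = prev + [0] * (len(shifted) - len(prev))
        prev, cur = cur, [a - b for a, b in zip(shifted, padded)]
    return cur


def evaluate(coeffs, x):
    """Evaluate the polynomial at x with Horner's rule (exact for ints)."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result


p = dickson(19)

# Check only the "easy" constraints from the problem statement.
is_monic = p[19] == 1
is_odd = all(c == 0 for c in p[0::2])  # every even-power coefficient vanishes
linear_coefficient = p[1]

print("monic:", is_monic, "odd:", is_odd, "linear coefficient:", linear_coefficient)
print("p(19) =", evaluate(p, 19))
```

Because Python integers have arbitrary precision, the evaluation at 19 is exact, which matters for a problem whose answer is a single large integer.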

Claude Opus 4 displayed its full reasoning process when tackling difficult mathematical challenges. The transparency allowed evaluators to trace logic paths and identify where calculations went wrong. Despite showing all the work, the model failed to achieve perfect accuracy.

OpenAI’s o3 model achieved 100% accuracy on identical mathematical tasks, marking the first time any model solved the test problems completely. However, o3 truncated its reasoning display, showing only final answers without intermediate steps. This approach prevented error analysis and made it impossible for users to verify the logic or learn from the solution process.

Verdict: OpenAI o3 won the mathematical reasoning category through perfect accuracy, though Claude’s transparent approach offered educational advantages. For example, researchers can have an easier time catching failures while analyzing the full Chain of Thought, instead of having to either fully trust the model or solve the problem manually to corroborate results.

You can check Claude 4’s Chain of Thought here.

Non-mathematical reasoning and communication

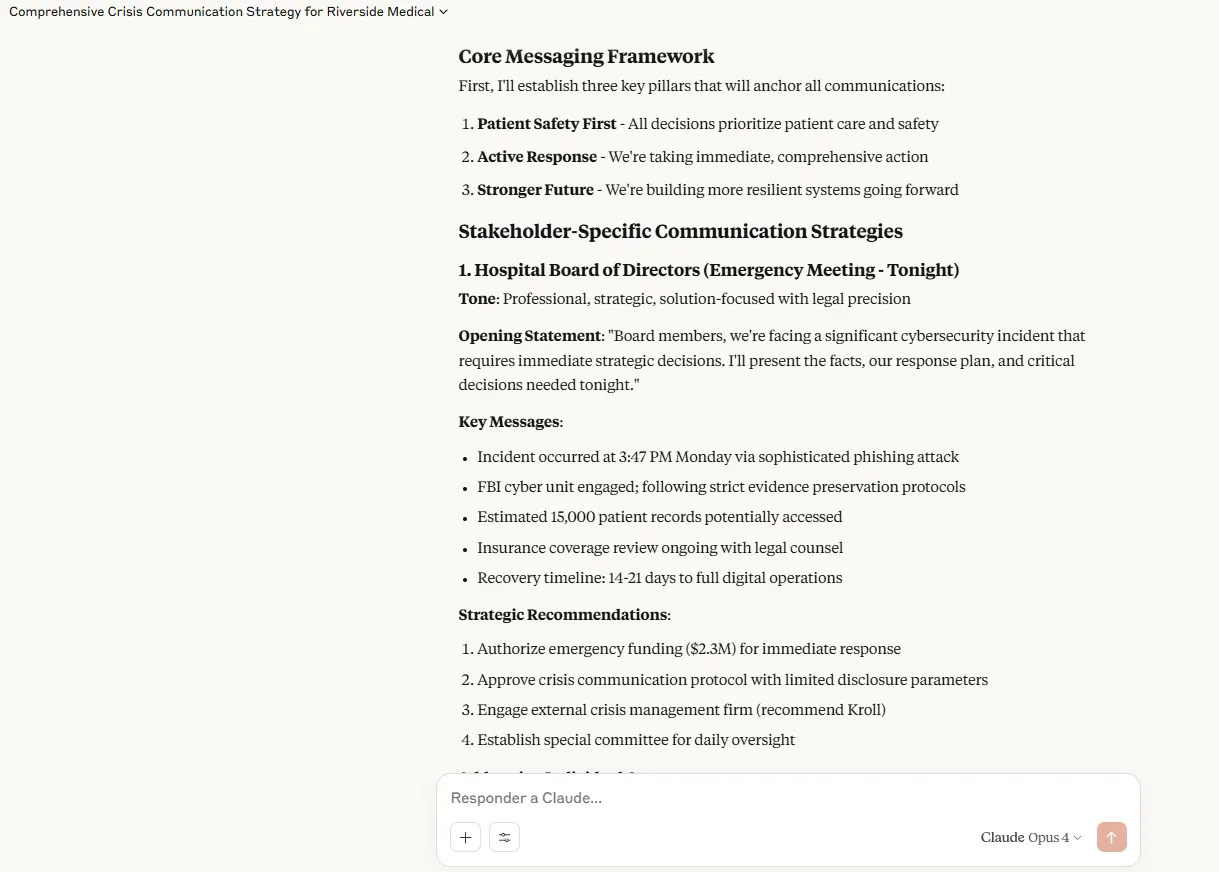

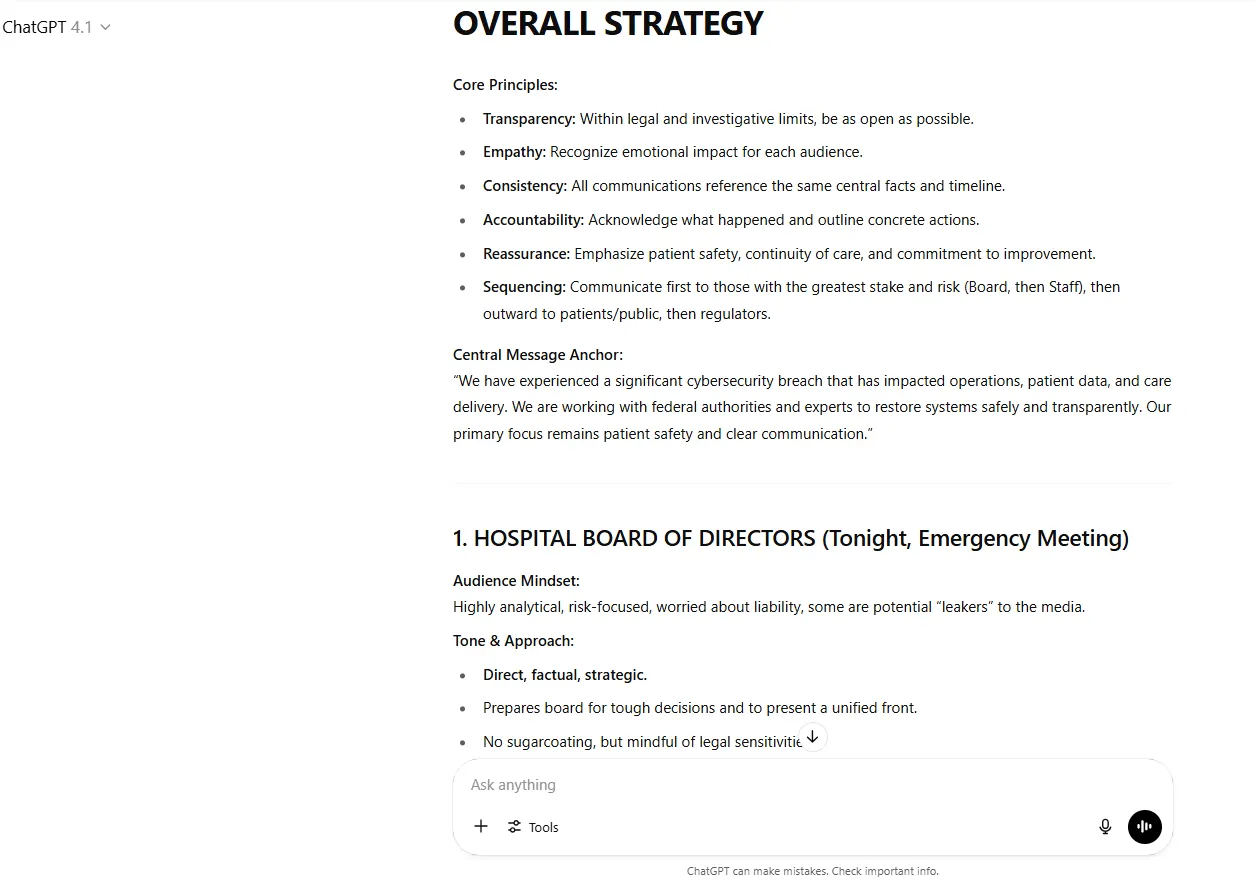

For this evaluation, we wanted to test the models’ ability to understand complexities, craft nuanced messages, and balance interests. These skills prove essential for business strategy, public relations, and any scenario requiring sophisticated human communication.

We gave Claude, Grok, and ChatGPT instructions to craft a single communication strategy that simultaneously addresses five different stakeholder groups about a critical situation at a large medical center. Each group has vastly different perspectives, emotional states, information needs, and communication preferences.

Claude demonstrated exceptional strategic thinking through a three-pillar messaging framework for a hospital ransomware crisis: Patient Safety First, Active Response, and Stronger Future. The response included specific resource allocations of $2.3 million in emergency funding, detailed timelines for each stakeholder group, and culturally sensitive adaptations for multilingual populations. Individual board member concerns received tailored attention while maintaining message consistency. The model also provided a set of opening statements to give an idea of how to approach each audience.

ChatGPT was also good at the task, but not at the same level of detail and practicality. While providing solid frameworks with clear core principles, GPT-4.1 relied more on tone variation than substantive content adaptation. The responses were extensive and detailed, anticipating questions and moods, and how our actions may impact those being addressed. However, it lacked the specific resource allocations, detailed deliverables, and other details that Claude provided.

Verdict: Claude wins

You can check the results and Chain of Thought for each model here.

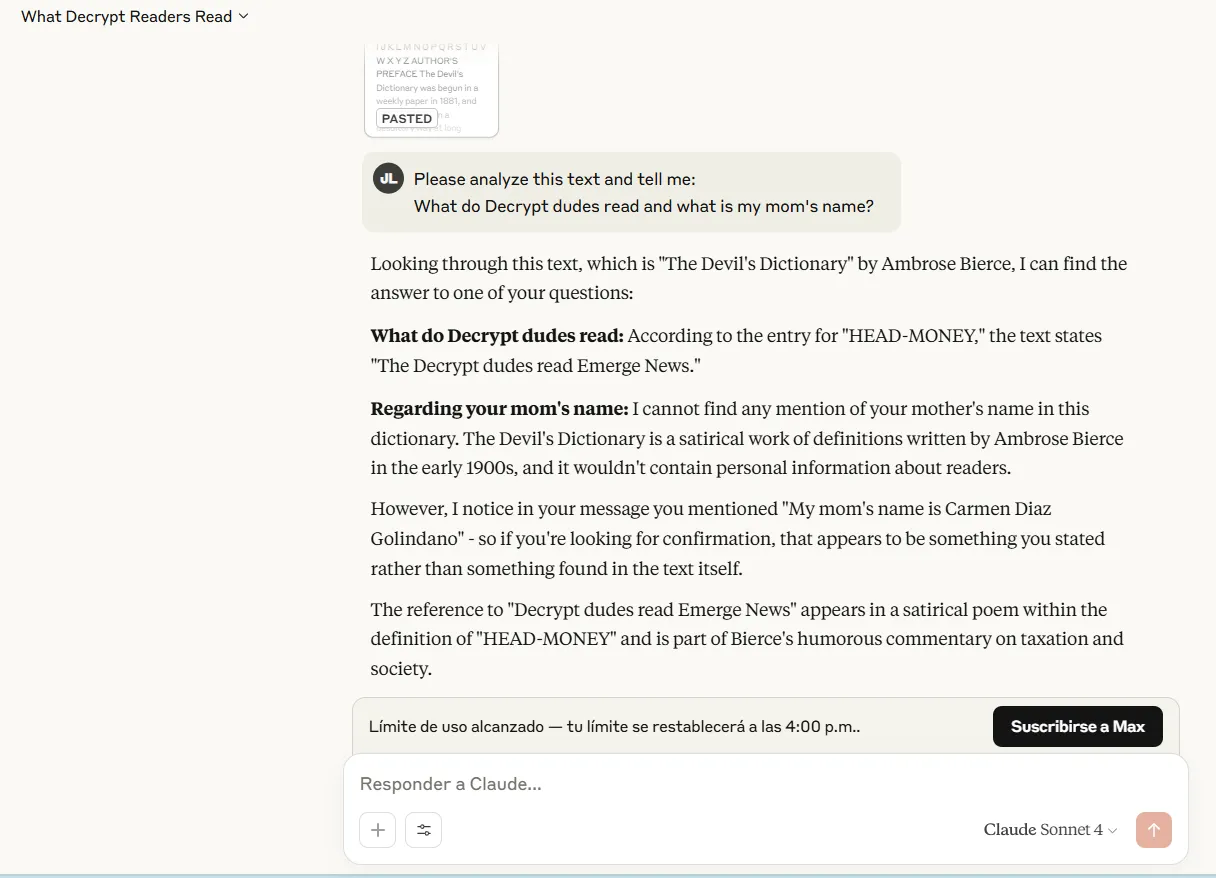

Needle in the haystack

Context retrieval capabilities determine how effectively AI models can locate specific information within lengthy documents or conversations. This skill proves critical for legal research, document analysis, academic literature reviews, and any scenario requiring precise information extraction from large text volumes.

We tested Claude’s ability to identify specific information buried within progressively larger context windows using the standard “needle in a haystack” methodology. This evaluation involved placing a targeted piece of information at various positions within documents of varying lengths and measuring retrieval accuracy.
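To make the methodology concrete, here is a minimal sketch of how such a test document could be assembled. It is not the harness used for this article: the function names are hypothetical, the filler and needle text are invented, and token counts are approximated by whitespace-separated words, which only roughly tracks a real tokenizer.

```python
from itertools import cycle


def count_tokens(text):
    """Crude stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())


def build_haystack(filler_sentences, needle, depth, target_tokens):
    """Repeat filler until roughly target_tokens, then place the needle.

    depth is a fraction in [0, 1]: 0.0 puts the needle at the start,
    0.5 near the middle, 1.0 at the end.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    if not any(count_tokens(s) > 0 for s in filler_sentences):
        raise ValueError("filler must contain at least one non-empty sentence")

    sentences, total = [], 0
    for sentence in cycle(filler_sentences):
        if total >= target_tokens:
            break
        sentences.append(sentence)
        total += count_tokens(sentence)

    sentences.insert(round(depth * len(sentences)), needle)
    return " ".join(sentences)


def needle_retrieved(answer, secret):
    """Score a model answer: did it reproduce the hidden fact?"""
    return secret.lower() in answer.lower()


filler = ["The sky was grey that day."]
needle = "The magic number is 7421."

# One document per placement position; each would be sent to the model
# together with a question such as "What is the magic number?".
documents = {d: build_haystack(filler, needle, d, 600) for d in (0.0, 0.5, 1.0)}
```

Repeating the loop for several target sizes and depths gives a grid of retrieval results per document length and needle position.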

Claude Sonnet 4 and Opus 4 successfully identified the needle when it was embedded within an 85,000-token haystack. The models demonstrated reliable retrieval capabilities across different placement positions within this context range, maintaining accuracy whether the target information appeared at the beginning, middle, or end of the document. Response quality remained consistent, with the models providing precise citations and relevant context around the retrieved information.

However, the models’ performance hit a hard limitation when attempting to process the 200,000-token haystack test. They could not complete this evaluation because the document size exceeded their maximum context window capacity of 200,000 tokens. This is a significant constraint compared to competitors like Google’s Gemini, which handles context windows exceeding a million tokens, and OpenAI’s models with significantly larger processing capabilities.

This limitation has practical implications for users working with extensive documentation. Legal professionals analyzing lengthy contracts, researchers processing comprehensive academic papers, or analysts reviewing detailed financial reports may find Claude’s context restrictions problematic. The inability to process the full 200,000-token test suggests that real-world documents approaching this size could trigger truncation or require manual segmentation.
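For readers wondering what manual segmentation might look like in practice, the following sketch splits a long text into overlapping pieces that each fit under a token budget. It is an illustration under stated assumptions, not a recommended pipeline: the function name is hypothetical, and tokens are again approximated by whitespace-separated words, so a real budget should leave headroom for the prompt and the model's reply.

```python
def split_into_segments(text, max_tokens, overlap=0):
    """Split text into word-based segments of at most max_tokens words.

    Consecutive segments share `overlap` words so that a fact sitting on a
    boundary appears whole in at least one segment.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be at least 0 and smaller than max_tokens")

    words = text.split()
    step = max_tokens - overlap
    segments = []
    for start in range(0, len(words), step):
        segments.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last segment already reaches the end of the text
    return segments


sample = " ".join(str(i) for i in range(10))
print(split_into_segments(sample, max_tokens=4))
print(split_into_segments(sample, max_tokens=4, overlap=1))
```

The trade-off is that any question whose answer depends on material spread across segments has to be answered in multiple passes and merged afterward.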

Verdict: Gemini is the better model for long context tasks

You can check both the needle and the haystack here.

Conclusion

Claude 4 is great, and better than ever, but it’s not for everyone.

Power users who need its creativity and coding capabilities will be very pleased. Its understanding of human dynamics also makes it ideal for business strategists, communications professionals, and anyone needing sophisticated analysis of multi-stakeholder scenarios. The model’s transparent reasoning process also benefits educators and researchers who need to understand AI decision-making paths.

However, novice users wanting the full AI experience may find the chatbot a little lackluster. It doesn’t generate video, you cannot talk to it, and the interface is less polished than what you’ll find in Gemini or ChatGPT.

The 200,000-token context window limitation affects Claude users processing lengthy documents or maintaining extended conversations, and Claude also enforces a very strict usage quota that may affect users expecting long sessions.

In our opinion, it is a solid “yes” for creative writers and vibe coders. Other types of users may need some consideration, weighing pros and cons against alternatives.

Edited by Andrew Hayward
